A new paper has been added to the collection of reproducible documents: Simulating propagation of separated wave modes in general anisotropic media, Part II: qS-wave propagators

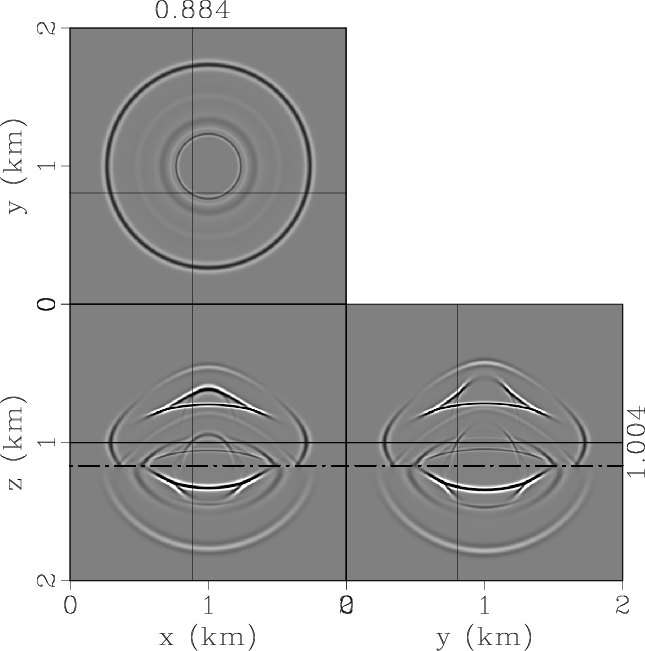

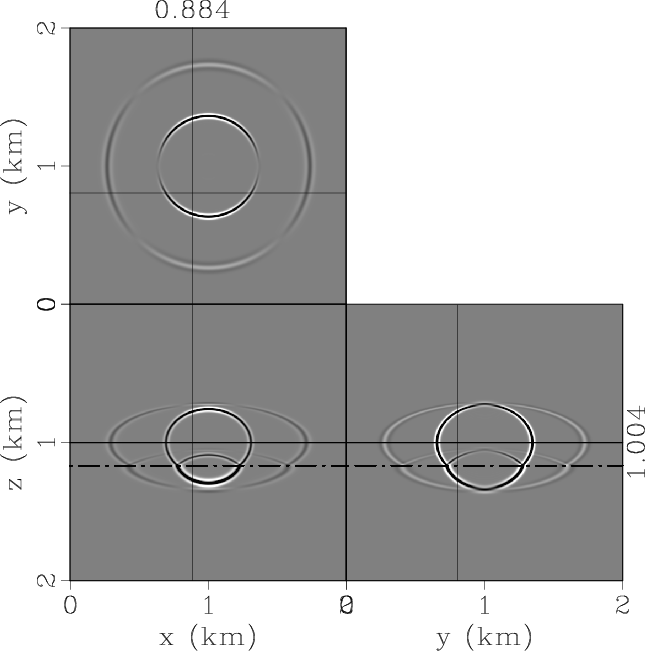

Shear waves, especially converted modes in multicomponent seismic data, provide significant information that allows better delineation of geological structures and characterization of petroleum reservoirs. Seismic imaging and inversion based on the elastic wave equation incur high computational cost and face many challenges in decoupling the wave modes and estimating the large number of model parameters. For transversely isotropic media, shear waves can be designated as pure SH and quasi-SV modes. By applying two different similarity transformations to the Christoffel equation, each designed to project the vector displacement wavefields onto the isotropic references of the polarization directions, we derive simplified second-order systems (i.e., pseudo-pure-mode wave equations) for SH- and qSV-waves, respectively. The first system propagates a vector wavefield with two horizontal components, whose sum produces pure-mode scalar SH-wave data, while the second propagates a vector wavefield with a summed horizontal component and a vertical component, whose sum produces a scalar field dominated in energy by qSV-waves. The simulated SH- or qSV-wave has the same kinematics as its counterpart in the elastic wavefield. As explained in our previous paper (Part I), we can obtain completely separated scalar qSV-wave fields by spatially filtering the pseudo-pure-mode qSV-wave fields. Synthetic examples demonstrate that these wave propagators provide efficient and flexible tools for qS-wave extrapolation in general transversely isotropic media.
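
For readers unfamiliar with the Christoffel equation mentioned in the abstract, the following is a minimal sketch of how it separates the pure SH mode from the qP and qSV modes in a transversely isotropic medium with a vertical symmetry axis (VTI). It is not the paper's propagator, only the standard plane-wave eigenvalue problem. The function names and the density-normalized stiffness values (in km²/s²) are assumptions chosen for illustration.

```python
import numpy as np


def christoffel_vti(c11, c13, c33, c55, c66, n):
    """Density-normalized Christoffel matrix of a VTI medium for a unit direction n."""
    nx, ny, nz = n
    g = np.empty((3, 3))
    g[0, 0] = c11 * nx**2 + c66 * ny**2 + c55 * nz**2
    g[1, 1] = c66 * nx**2 + c11 * ny**2 + c55 * nz**2
    g[2, 2] = c55 * (nx**2 + ny**2) + c33 * nz**2
    g[0, 1] = g[1, 0] = (c11 - c66) * nx * ny
    g[0, 2] = g[2, 0] = (c13 + c55) * nx * nz
    g[1, 2] = g[2, 1] = (c13 + c55) * ny * nz
    return g


def vti_phase_velocities(c11, c13, c33, c55, c66, theta, phi=0.0):
    """Return (v_qP, v_qSV, v_SH) for polar angle theta and azimuth phi, in radians."""
    st, ct = np.sin(theta), np.cos(theta)
    cp, sp = np.cos(phi), np.sin(phi)
    n = np.array([st * cp, st * sp, ct])
    g = christoffel_vti(c11, c13, c33, c55, c66, n)

    # SH is polarized horizontally and perpendicular to the propagation
    # azimuth, so it decouples and its eigenvalue is a simple quadratic form.
    e_sh = np.array([-sp, cp, 0.0])
    v_sh = np.sqrt(e_sh @ g @ e_sh)

    # qP and qSV are polarized in the vertical plane containing n, so the
    # remaining problem is 2x2 in the basis (horizontal in-plane, vertical).
    basis = np.array([[cp, sp, 0.0], [0.0, 0.0, 1.0]]).T
    g2 = basis.T @ g @ basis
    v2_qsv, v2_qp = np.linalg.eigvalsh(g2)  # eigenvalues in ascending order
    return np.sqrt(v2_qp), np.sqrt(v2_qsv), v_sh


# Hypothetical VTI medium (density-normalized stiffnesses, km^2/s^2).
medium = dict(c11=12.6, c13=5.35, c33=9.0, c55=2.25, c66=2.7)

for deg in (0, 30, 60, 90):
    vp, vsv, vsh = vti_phase_velocities(theta=np.radians(deg), **medium)
    print(f"theta={deg:2d} deg  qP={vp:.3f}  qSV={vsv:.3f}  SH={vsh:.3f} km/s")
```

In this sketch the SH mode is obtained without solving an eigenvalue problem, which reflects why it can be treated as a pure mode, whereas qSV remains coupled to qP through the off-diagonal term of the 2x2 block.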